Robotic Vision and Action in Agriculture: the future of agri-food systems and their deployment in the real world


This workshop will bring together researchers and practitioners to discuss advances in robotics and how these advances intersect with agricultural practice. The workshop will therefore focus not only on recent advances in robotic vision and action, but also on what they mean for agricultural practice and how robotics can be used to better manage and understand crops and their environment.

Motivation and Objectives

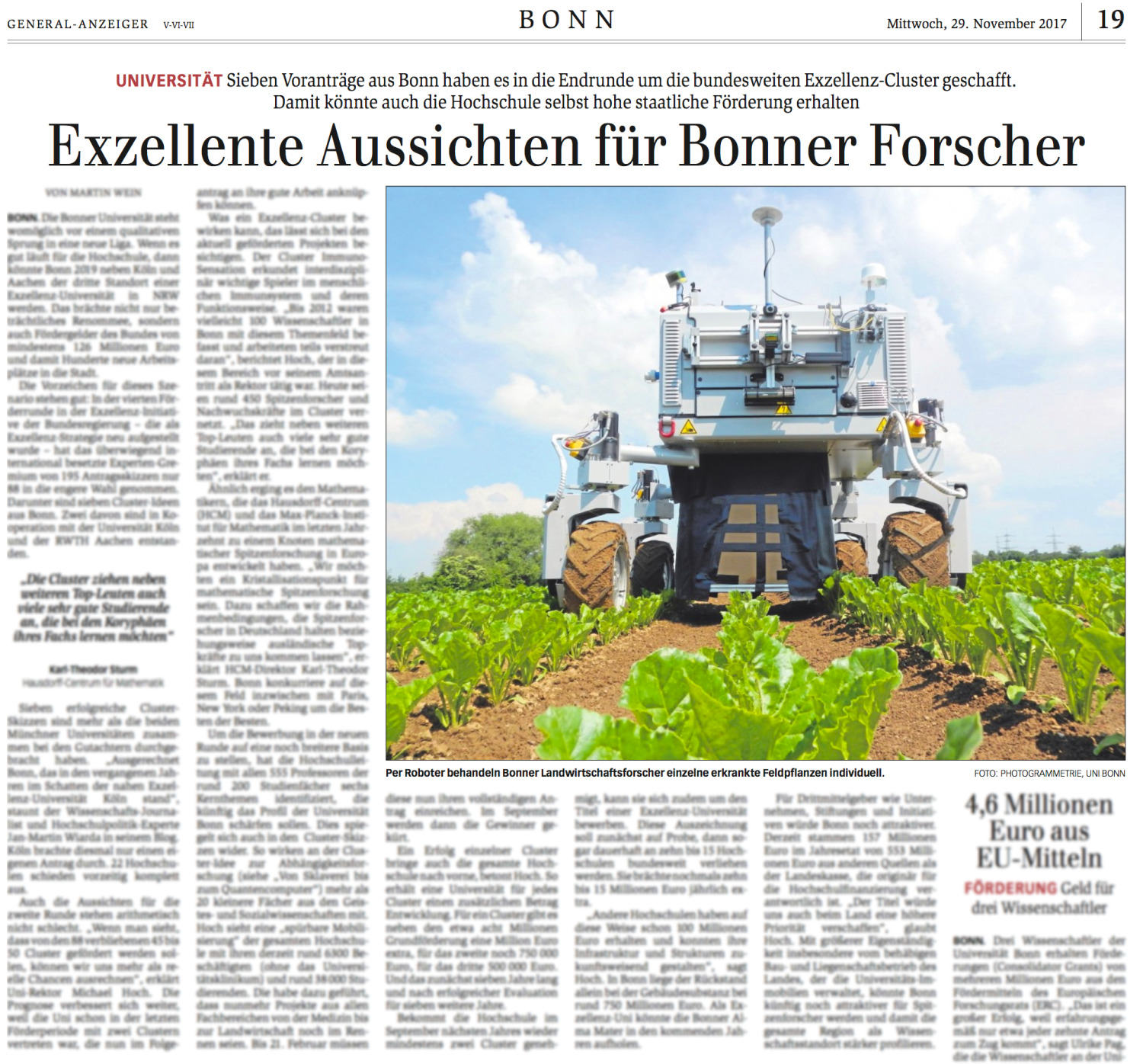

Agricultural robotics faces a number of unique challenges and operates at the intersection of applied robotic vision, manipulation and crop science. Robotics will play a key role in improving productivity, increasing crop quality and even enabling individualised weed and crop treatment. These advances are integral to feeding a global population expected to reach 9 billion by 2050, which will require agricultural production to double to meet food demand.

This workshop brings together researchers and industry practitioners working on novel approaches for long-term operation across changing agricultural environments, including broad-acre crops, orchard crops, nurseries and greenhouses, and horticulture. It will also host Prof. Achim Walter, an internationally renowned crop scientist, who will provide a unique perspective on how robotics could further revolutionise agriculture in the long term.

The goal of the workshop is to discuss the future of agricultural robotics and how perceiving and acting with a robot in the field enables a range of new applications and approaches. Particular emphasis will be placed on vision and action systems that work in the field by coping with changes in the appearance and geometry of the environment. Learning how to interact with this complex environment will also be of special interest, as will the alternative applications enabled by better understanding and exploiting the link between robotics and crop science.

List of Topics

Topics of interest to this workshop include, but are not necessarily limited to:

- Novel perception for agricultural robots, including passive and active methods

- Manipulators for harvesting, soil preparation and crop protection

- Long-term autonomy and navigation in unstructured environments

- Data analytics and real-time decision making with robots-in-the-loop

- Low-cost sensing and algorithms for day/night operation, and

- User interfaces for end-users

Invited Presenters

The workshop will feature the following distinguished experts for invited talks:

Prof. Achim Walter (ETH Zurich, Department of Environmental Systems Science)

Prof. Qin Zhang (Washington State University)

Organisers

Chris McCool

Australian Centre of Excellence for Robotic Vision

Queensland University of Technology

c.mccool@qut.edu.au

Chris Lehnert

Australian Centre of Excellence for Robotic Vision

Queensland University of Technology

c.lehnert@qut.edu.au

Inkyu Sa

ETH Zurich

Autonomous Systems Laboratory

inkyu.sa@mavt.ethz.ch

Juan Nieto

ETH Zurich

Autonomous Systems Laboratory

jnieto@ethz.ch

Cyrill Stachniss

University of Bonn

Photogrammetry, IGG

cyrill.stachniss@igg.uni-bonn.de