KDnuggets reports on our work on plant classification using neural networks.

Author: stachnis

2017-10: Successful Flourish P2 Review Meeting at Campus Klein Altendorf

The Flourish P2 review meeting was successfully conducted on October 11 and 12 at the Campus Klein Altendorf of the University of Bonn, including demonstrations with the BoniRob ground vehicle and unmanned aerial vehicles, and received an excellent evaluation.

2017-09-29: Cluster of Excellence Proposal “PhenoRob” reaches the next round

We are all super excited that our Cluster of Excellence proposal “PhenoRob – Robotics and Phenotyping for Sustainable Crop Production” has made it to the next round.

2017-09: Work on Semi-Supervised Crop-Weed Detection becomes IROS 2017 Best Application Paper Finalist

The work “Semi-Supervised Online Visual Crop and Weed Classification in Precision Farming Exploiting Plant Arrangement” by Philipp Lottes and Cyrill Stachniss, presented at IROS 2017 in Vancouver, was selected as an IROS 2017 Best Application Paper Finalist.

Abstract – Precision farming robots offer a great potential for reducing the amount of agro-chemicals that is required in the fields through a targeted, per-plant intervention. To achieve this, robots must be able to reliably distinguish crops from weeds on different fields and across growth stages. In this paper, we tackle the problem of separating crops from weeds reliably while requiring only a minimal amount of training data through a semi-supervised approach. We exploit the fact that most crops are planted in rows with a similar spacing along the row, which in turn can be used to initialize a vision-based classifier requiring only minimal user efforts to adapt it to a new field. We implemented our approach using C++ and ROS and thoroughly tested it on real farm robots operating in different countries. The experiments presented in this paper show that with around 1 min of labeling time, we can achieve classification results with an accuracy of more than 95% in real sugar beet fields in Germany and Switzerland.

2017-09: Code Available: MPR: Multi-Cue Photometric Registration by B. Della Corte, I. Bogoslavskyi, C. Stachniss, and G. Grisetti

A General Framework for Flexible Multi-Cue Photometric Point Cloud Registration by Bartolomeo Della Corte, Igor Bogoslavskyi, Cyrill Stachniss, and Giorgio Grisetti

MPR: Multi-Cue Photometric Registration is available on GitLab

The ability to build maps is a key functionality for the majority of mobile robots. A central ingredient to most mapping systems is the registration or alignment of the recorded sensor data. In this paper, we present a general methodology for photometric registration that can deal with multiple different cues. We provide examples for registering RGBD as well as 3D LIDAR data. In contrast to popular point cloud registration approaches such as ICP, our method does not rely on explicit data association and exploits multiple modalities such as raw range and image data streams. Color, depth, and normal information are handled in a uniform manner and the registration is obtained by minimizing the pixel-wise difference between two multi-channel images. We developed a flexible and general framework and implemented our approach inside that framework. We also released our implementation as open source C++ code. The experiments show that our approach allows for an accurate registration of the sensor data without requiring an explicit data association or model-specific adaptations to datasets or sensors. Our approach exploits the different cues in a natural and consistent way and the registration can be done at framerate for a typical range or imaging sensor.

This code is related to the following publication:

“A General Framework for Flexible Multi-Cue Photometric Point Cloud Registration” by Bartolomeo Della Corte, Igor Bogoslavskyi, Cyrill Stachniss, Giorgio Grisetti. Submitted to ICRA 2018 and available on arXiv:1709.05945.
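The idea of registering by "minimizing the pixel-wise difference between two multi-channel images" can be illustrated with a toy example. The following is a minimal Python sketch and not the released C++ implementation: it assumes images stored as nested lists, restricts the motion to an integer column shift, and replaces the optimization with a brute-force search. The names `photometric_error`, `register_shift`, `weights`, and `max_shift` are hypothetical and chosen only for this illustration.

```python
from typing import List, Sequence

# image[row][col] is a list of channel values, e.g. [intensity, depth]
Image = List[List[List[float]]]


def photometric_error(ref: Image, cur: Image, dx: int,
                      weights: Sequence[float]) -> float:
    """Mean weighted squared difference between ref and cur shifted by dx columns.

    All channels (cues) are treated the same way; only the weight differs.
    Pixels that fall outside the image after shifting are ignored.
    """
    total = 0.0
    count = 0
    for r, row in enumerate(ref):
        for c, ref_px in enumerate(row):
            cc = c + dx
            if 0 <= cc < len(cur[r]):
                for w, a, b in zip(weights, ref_px, cur[r][cc]):
                    total += w * (a - b) ** 2
                count += 1
    return total / count if count else float("inf")


def register_shift(ref: Image, cur: Image, weights: Sequence[float],
                   max_shift: int = 3) -> int:
    """Brute-force search for the column shift with the lowest photometric error."""
    return min(range(-max_shift, max_shift + 1),
               key=lambda dx: photometric_error(ref, cur, dx, weights))


# Toy data: one image row with two channels (intensity, depth).
ref = [[[float(c * c), 0.5 * c] for c in range(8)]]
# cur shows the same content moved two pixels to the right;
# the first two pixels are filler values.
cur = [[[100.0, 100.0]] * 2 + ref[0][:6]]

best_dx = register_shift(ref, cur, weights=(1.0, 1.0))
```

No correspondences between individual points are computed here: the alignment follows only from comparing channel values pixel by pixel, which is the property the abstract contrasts with ICP-style approaches.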

2017-09: Successful UAV-g 2017 Conference held in Bonn

Between September 4 and 7, 2017 around 180 researchers from over 25 different countries attended the UAV-g conference held in Bonn. The conference featured 1 workshop/tutorial day and 3 days of technical program including two invited speakers. All papers are now online at the UAV-g 2017 Website.

2017-08: Olga Vysotska receives a Google Award for the ACM womENcourage 2017

Olga Vysotska receives a Google Award for the ACM womENcourage 2017 in Barcelona in September 2017. The award is given through the European ACM-W committee (ACM-WE). The ACM-WE vision is a transformed European professional and scholarly landscape where women are supported and inspired to pursue their dreams and ambitions to find fulfillment in the computing field.

2017-06: Code Available: Online Place Recognition by Olga Vysotska

Online Place Recognition by Graph-Based Matching of Image Sequences by Olga Vysotska and Cyrill Stachniss

Online Place Recognition is available on GitHub

Given two sequences of images represented by their descriptors, the code constructs a data association graph and performs a search within this graph, so that for every query image, the code computes a matching hypothesis to an image in a database sequence as well as matching hypotheses for the previous images. The matching procedure can be performed in two modes: feature-based and cost-matrix-based. For more theoretical details, please refer to our paper “Lazy Data Association For Image Sequences Matching Under Substantial Appearance Changes”.

This code is related to the following publication:

O. Vysotska and C. Stachniss, “Lazy Data Association For Image Sequences Matching Under Substantial Appearance Changes,” IEEE Robotics and Automation Letters (RA-L) and IEEE International Conference on Robotics & Automation (ICRA), vol. 1, iss. 1, pp. 1-8, 2016. doi:10.1109/LRA.2015.2512936.
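To give an intuition for the cost-matrix-based mode of the Online Place Recognition code, the sketch below matches a query sequence against a database sequence by finding a cheapest path through a cost matrix. This is a simplified illustration in Python and not the released code: it uses dense dynamic programming instead of the lazy graph search described in the paper, and the function name `match_sequences` and the parameter `fan_out` (how many database images may be skipped between consecutive query images) are assumptions made for this example.

```python
from typing import List


def match_sequences(cost: List[List[float]], fan_out: int = 2) -> List[int]:
    """Return, for each query image, the index of the matched database image.

    cost[q][d] is the matching cost between query image q and database image d.
    Consecutive query images may only move forward in the database by
    0..fan_out images, which encodes the sequence assumption.
    """
    n_q, n_d = len(cost), len(cost[0])
    inf = float("inf")
    # best[q][d]: cheapest accumulated cost of a path ending in node (q, d)
    best = [cost[0][:]] + [[inf] * n_d for _ in range(n_q - 1)]
    parent = [[-1] * n_d for _ in range(n_q)]

    for q in range(1, n_q):
        for d in range(n_d):
            for prev in range(max(0, d - fan_out), d + 1):
                candidate = best[q - 1][prev] + cost[q][d]
                if candidate < best[q][d]:
                    best[q][d] = candidate
                    parent[q][d] = prev

    # Backtrack from the cheapest node in the last query row.
    d = min(range(n_d), key=lambda j: best[-1][j])
    path = [d]
    for q in range(n_q - 1, 0, -1):
        d = parent[q][d]
        path.append(d)
    path.reverse()
    return path


# Toy cost matrix: 3 query images, 5 database images, low cost means similar.
toy_cost = [
    [1.0, 0.0, 1.0, 1.0, 1.0],
    [1.0, 1.0, 0.0, 1.0, 1.0],
    [1.0, 1.0, 1.0, 1.0, 0.0],
]
matches = match_sequences(toy_cost)
```

The backtracked path yields one hypothesis per query image, including those seen earlier, which mirrors the description above of obtaining matching hypotheses for the current and the previous images.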

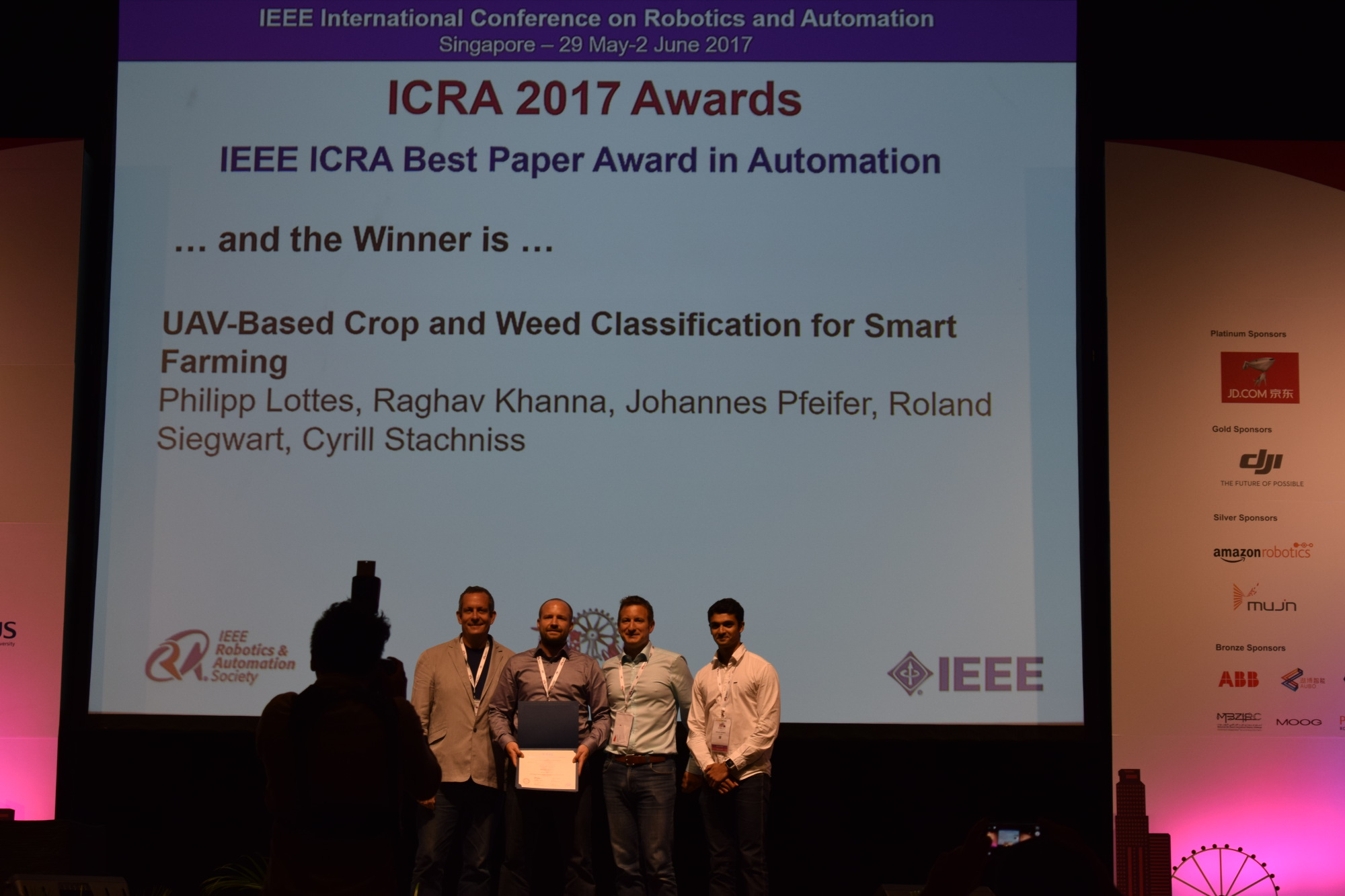

2017-06: “UAV-Based Crop and Weed Classification for Smart Farming” Receives the Best Paper Award in Automation of the IEEE Robotics and Automation Society at ICRA 2017

The work “UAV-Based Crop and Weed Classification for Smart Farming” by Philipp Lottes, Raghav Khanna, Johannes Pfeifer, Roland Siegwart and Cyrill Stachniss received the Best Paper Award in Automation of the IEEE Robotics and Automation Society at ICRA 2017.

Abstract: Unmanned aerial vehicles (UAVs) and other robots in smart farming applications offer the potential to monitor farm land on a per-plant basis, which in turn can reduce the amount of herbicides and pesticides that must be applied. A central information for the farmer as well as for autonomous agriculture robots is the knowledge about the type and distribution of the weeds in the field. In this regard, UAVs offer excellent survey capabilities at low cost. In this paper, we address the problem of detecting value crops such as sugar beets as well as typical weeds using a camera installed on a light-weight UAV. We propose a system that performs vegetation detection, plant-tailored feature extraction, and classification to obtain an estimate of the distribution of crops and weeds in the field. We implemented and evaluated our system using UAVs on two farms, one in Germany and one in Switzerland and demonstrate that our approach allows for analyzing the field and classifying individual plants.

2017-05: Code Available: High-Speed Depth Clustering Using 3D Range Sensor Data by Igor Bogoslavskyi (Updated)

Depth Clustering by Igor Bogoslavskyi and Cyrill Stachniss

Depth Clustering is available on GitHub

We released our code that implements a fast and robust algorithm to segment point clouds taken with a Velodyne sensor into objects. It works with all available Velodyne sensors, i.e., the 16, 32, and 64 beam ones. See a video that shows all objects whose bounding box has a volume of less than 10 cubic meters:

This code is related to the following publications:

I. Bogoslavskyi and C. Stachniss, “Efficient Online Segmentation for Sparse 3D Laser Scans,” PFG — Journal of Photogrammetry, Remote Sensing and Geoinformation Science, pp. 1-12, 2017.

as well as

I. Bogoslavskyi and C. Stachniss, “Fast Range Image-Based Segmentation of Sparse 3D Laser Scans for Online Operation,” in Proceedings of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2016.
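The volume filter mentioned for the Depth Clustering video (objects whose bounding box has a volume below 10 cubic meters) could look roughly like the following Python sketch. It is not taken from the released code: it assumes that clusters are already available as lists of (x, y, z) points in meters and that the bounding box is axis-aligned, and the names `bounding_box_volume` and `filter_small_clusters` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def bounding_box_volume(points: Sequence[Point]) -> float:
    """Volume of the axis-aligned bounding box around the points (assumption)."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs)) * (max(ys) - min(ys)) * (max(zs) - min(zs))


def filter_small_clusters(clusters: Sequence[Sequence[Point]],
                          max_volume: float = 10.0) -> List[Sequence[Point]]:
    """Keep only clusters whose bounding box volume is below max_volume (m^3)."""
    return [c for c in clusters if bounding_box_volume(c) < max_volume]


# Two toy clusters given by their extreme corner points.
car_like = [(0.0, 0.0, 0.0), (4.0, 2.0, 1.0)]    # 4 m x 2 m x 1 m
wall_like = [(0.0, 0.0, 0.0), (10.0, 1.0, 3.0)]  # 10 m x 1 m x 3 m
kept = filter_small_clusters([car_like, wall_like])
```

In this toy case only the car-sized cluster passes the filter, while the wall-sized one is discarded.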